Course: Hadoop Operations

Duration: 42 hours

Language: English (US)

Access duration: 90 days

Details

In this Hadoop training course you will learn all about Hadoop clusters, starting with how to design clusters and how to run Hadoop in the cloud.

After that, you will become familiar with deploying and securing Hadoop clusters. The course ends with an introduction to capacity management, Cloudera Manager, and performance tuning of Hadoop clusters. Topics covered include EC2, AWS, EMR jobs, Hive daemons, installing Hadoop, NameNode failure, DataNode, YARN, HDFS, event management, JobHistoryServer logs, performance tuning, and much more.

Result

After completing this course, you will have extensive knowledge of Hadoop from an operations perspective.

Prerequisites

Basic knowledge of cloud computing, big data and databases is a plus.

Target audience

Software developers, web developers, database administrators

Content

Hadoop Operations

Designing Hadoop Clusters

- start the course

- describe the principles of supercomputing

- recall the roles and skills needed for the Hadoop engineering team

- recall the advantages and shortcomings of using Hadoop as a supercomputing platform

- describe the three axioms of supercomputing

- describe the "dumb hardware, smart software" and "share nothing" design principles

- describe the design principles "move processing, not data", "embrace failure", and "build applications, not infrastructure"

- describe the different rack architectures for Hadoop

- describe the best practices for scaling a Hadoop cluster

- recall the best practices for different types of network clusters

- recall the primary responsibilities for the master, data, and edge servers

- recall some of the recommendations for a master server and edge server

- recall some of the recommendations for a data server

- recall some of the recommendations for an operating system

- recall some of the recommendations for hostnames and DNS entries

- describe the recommendations for hard disk drives (HDDs)

- calculate the correct number of disks required for a storage solution

- compare the use of commodity hardware with enterprise disks

- plan for the development of a Hadoop cluster

- set up flash drives as boot media

- set up a kickstart file as boot media

- set up a network installer

- identify the hardware and networking recommendations for a Hadoop cluster

Hadoop in the Cloud

- start the course

- describe how cloud computing can be used as a solution for Hadoop

- recall some of the most common services of the EC2 service bundle

- recall some of the most common services that Amazon offers

- describe how the AWS credentials are used for authentication

- create an AWS account

- describe the use of AWS access keys

- describe AWS identification and access management

- set up AWS IAM

- describe the use of SSH key pairs for remote access

- set up S3 and import data

- provision a micro instance of EC2

- prepare to install and configure a Hadoop cluster on AWS

- create an EC2 baseline server

- create an Amazon machine image

- create an Amazon cluster

- describe what the command line interface is used for

- use the command line interface

- describe the various ways to move data into AWS

- recall the advantages and limitations of using Hadoop in the cloud

- recall the advantages and limitations of using AWS EMR

- describe EMR end-user connections and EMR security levels

- set up an EMR cluster

- run an EMR job from the web console

- run an EMR job with Hue

- run an EMR job with the command line interface

- write an Elastic MapReduce script for AWS

Deploying Hadoop Clusters

- start the course

- describe the configuration management tools

- simulate a configuration management tool

- build an image for a baseline server

- build an image for a data server

- build an image for a master server

- provision an admin server

- describe the layout and structure of the Hadoop cluster

- provision a Hadoop cluster

- distribute configuration files and admin scripts

- use init scripts to start and stop a Hadoop cluster

- configure a Hadoop cluster

- configure logging for the Hadoop cluster

- build images for required servers in the Hadoop cluster

- configure a MySQL database

- build the Hadoop clients

- configure Hive daemons

- test the functionality of Flume, Sqoop, HDFS, and MapReduce

- test the functionality of Hive and Pig

- configure HCatalog daemons

- configure Oozie

- configure Hue and Hue users

- install Hadoop onto the admin server

Hadoop Cluster Availability

- start the course

- describe how Hadoop leverages fault tolerance

- recall the most common causes for NameNode failure

- recall the uses for the Checkpoint node

- test the availability of the NameNode

- describe the operation of the NameNode during a recovery

- swap to a new NameNode

- recall the most common causes for DataNode failure

- test the availability of the DataNode

- describe the operation of the DataNode during a recovery

- set up the DataNode for replication

- identify and recover from a missing data block scenario

- describe the functions of Hadoop high availability

- edit the Hadoop configuration files for high availability

- set up a high availability solution for NameNode

- recall the requirements for enabling an automated failover for the NameNode

- create an automated failover for the NameNode

- recall the most common causes for YARN task failure

- describe the functions of YARN containers

- test YARN container reliability

- recall the most common causes of YARN job failure

- test application reliability

- describe the system view of a Resource Manager configured for high availability

- set up high availability for the Resource Manager

- move the Resource Manager HA to alternate master servers

Securing Hadoop Clusters

- start the course

- describe the four pillars of the Hadoop security model

- recall the ports required for Hadoop and how network gateways are used

- configure security groups in AWS

- describe Kerberos and recall some of the common commands

- diagram Kerberos and label the primary components

- prepare for a Kerberos installation

- install Kerberos

- configure Kerberos

- describe how to configure HDFS and YARN for use with Kerberos

- configure HDFS for Kerberos

- configure YARN for Kerberos

- describe how to configure Hive for use with Kerberos

- configure Hive for Kerberos

- describe how to configure Pig, Sqoop, and Oozie for use with Kerberos

- configure Pig and HttpFS for use with Kerberos

- configure Oozie for use with Kerberos

- configure Hue for use with Kerberos

- describe how to configure Flume for use with Kerberos

- describe the security model for users on a Hadoop cluster

- describe the use of POSIX permissions and access control lists (ACLs) for managing user access

- create access control lists

- describe how to encrypt data in motion for Hadoop, Sqoop, and Flume

- encrypt data in motion

- describe how to encrypt data at rest

- recall the primary security threats faced by the Hadoop cluster

- describe how to monitor Hadoop security

- configure HBase for Kerberos

Operating Hadoop Clusters

- start the course

- monitor and improve service levels

- deploy a Hadoop release

- describe the purpose of change management

- describe rack awareness

- write configuration files for rack awareness

- start and stop a Hadoop cluster

- write init scripts for Hadoop

- describe the tools fsck and dfsadmin

- use fsck to check the HDFS file system

- set quotas for the HDFS file system

- install and configure trash

- manage an HDFS DataNode

- use include and exclude files to replace a DataNode

- describe the operations for scaling a Hadoop cluster

- add a DataNode to a Hadoop cluster

- describe the process for balancing a Hadoop cluster

- balance a Hadoop cluster

- describe the operations involved in backing up data

- use distcp to copy data from one cluster to another

- describe MapReduce job management on a Hadoop cluster

- perform MapReduce job management on a Hadoop cluster

- plan an upgrade of a Hadoop cluster

Stabilizing Hadoop Clusters

- start the course

- describe the importance of event management

- describe the importance of incident management

- describe the different methodologies used for root cause analysis

- recall what Ganglia is and what it can be used for

- recall how Ganglia monitors Hadoop clusters

- install Ganglia

- describe Hadoop Metrics2

- install Hadoop Metrics2 for Ganglia

- describe how to use Ganglia to monitor a Hadoop cluster

- use Ganglia to monitor a Hadoop cluster

- recall what Nagios is and what it can be used for

- install Nagios

- manage Nagios contact records

- manage Nagios Push

- use Nagios commands

- use Nagios to monitor a Hadoop cluster

- use Hadoop Metrics2 for Nagios

- describe how to manage logging levels

- describe how to configure Hadoop jobs for logging

- describe how to configure log4j for Hadoop

- describe how to configure JobHistoryServer logs

- configure Hadoop logs

- describe the problem management lifecycle

- recall some of the best practices for problem management

- describe the categories of errors for a Hadoop cluster

- conduct a root cause analysis on a major problem

- use different monitoring tools to identify problems, failures, errors and solutions

Capacity Management for Hadoop Clusters

- start the course

- compare availability and performance

- describe different strategies of resource capacity management

- describe how schedulers perform resource management

- set quotas for the HDFS file system

- recall how to set the maximum and minimum memory allocations per container

- describe how the fair scheduling method allows all applications to get, on average, an equal share of resources over time

- describe the primary algorithm and the configuration files for the Fair Scheduler

- describe the default behavior of the Fair Scheduler methods

- monitor the behavior of Fair Share

- describe the policy for single resource fairness

- describe how resources are distributed over the total capacity

- identify different configuration options for single resource fairness

- configure single resource fairness

- describe the minimum share function of the Fair Scheduler

- configure minimum share on the Fair Scheduler

- describe the preemption functions of the Fair Scheduler

- configure preemption for the Fair Scheduler

- describe dominant resource fairness

- write service levels for performance

- use the Fair Scheduler with multiple users

Performance Tuning of Hadoop Clusters

- start the course

- recall the three main functions of service capacity

- describe different strategies of performance tuning

- list some of the best practices for network tuning

- install compression

- describe the configuration files and parameters used in performance tuning of the operating system

- describe the purpose of Java tuning

- recall some of the rules for tuning the DataNode

- describe the configuration files and parameters used in performance tuning of memory for daemons

- describe the purpose of memory tuning for YARN

- recall why the Node Manager kills containers

- performance tune memory for the Hadoop cluster

- describe the configuration files and parameters used in performance tuning of HDFS

- describe the sizing and balancing of the HDFS data blocks

- describe the use of TestDFSIO

- performance tune HDFS

- describe the configuration files and parameters used in performance tuning of YARN

- configure speculative execution

- describe the configuration files and parameters used in performance tuning of MapReduce

- tune MapReduce for performance

- describe the practice of benchmarking on a Hadoop cluster

- describe the different tools used for benchmarking a cluster

- perform a benchmark of a Hadoop cluster

- describe the purpose of application modeling

- optimize memory and benchmark a Hadoop cluster

Cloudera Manager and Hadoop Clusters

- start the course

- describe what cluster management entails and recall some of the tools that can be used

- describe different tools from a functional perspective

- describe the purpose and functionality of Cloudera Manager

- install Cloudera Manager

- use Cloudera Manager to deploy a cluster

- use Cloudera Manager to install Hadoop

- describe the different parts of the Cloudera Manager Admin Console

- describe the Cloudera Manager internal architecture

- use Cloudera Manager to manage a cluster

- manage Cloudera Manager's services

- manage hosts with Cloudera Manager

- set up Cloudera Manager for high availability

- use Cloudera Manager to manage resources

- use Cloudera Manager's monitoring features

- manage logs through Cloudera Manager

- improve cluster performance with Cloudera Manager

- install and configure Impala

- install and configure Sentry

- implement security administration using Hive

- perform backups, snapshots, and upgrades using Cloudera Manager

- configure Hue with MySQL

- import data using Hue

- use Hue to run a Hive job

- use Hue to edit Oozie workflows and coordinators

- format HDFS, create an HDFS directory, import data, run a WordCount, and view the results

Course options

We offer several optional training products to enhance your learning experience. If you plan to use this training course to prepare for an official exam, we highly recommend these optional products to ensure an optimal learning experience. For some courses, only a practice exam and/or a practice lab is available.

Optional practice exam (trial exam)

To supplement this training course you may add a special practice exam. This practice exam comprises a number of trial exams which are very similar to the real exam, both in terms of form and content. This is the ultimate way to test whether you are ready for the exam.

Optional practice lab

To supplement this training course you may add a special practice lab. You perform the tasks on real hardware and/or software applicable to your lab. The labs are fully hosted in our cloud; all you need to use our practice labs is a web browser. In the LiveLab environment you will find exercises that you can start immediately. The lab environment consists of complete networks containing, for example, clients and servers. This is the ultimate way to gain extensive hands-on experience.


Why Icttrainingen

With our training concept you save up to 80% on training courses.

Start learning whenever you want. You set your own pace.

Exchange ideas with fellow students and establish yourself as an authority in your field.

After successfully completing your course, you receive the official certificate of participation from Icttrainingen.nl.

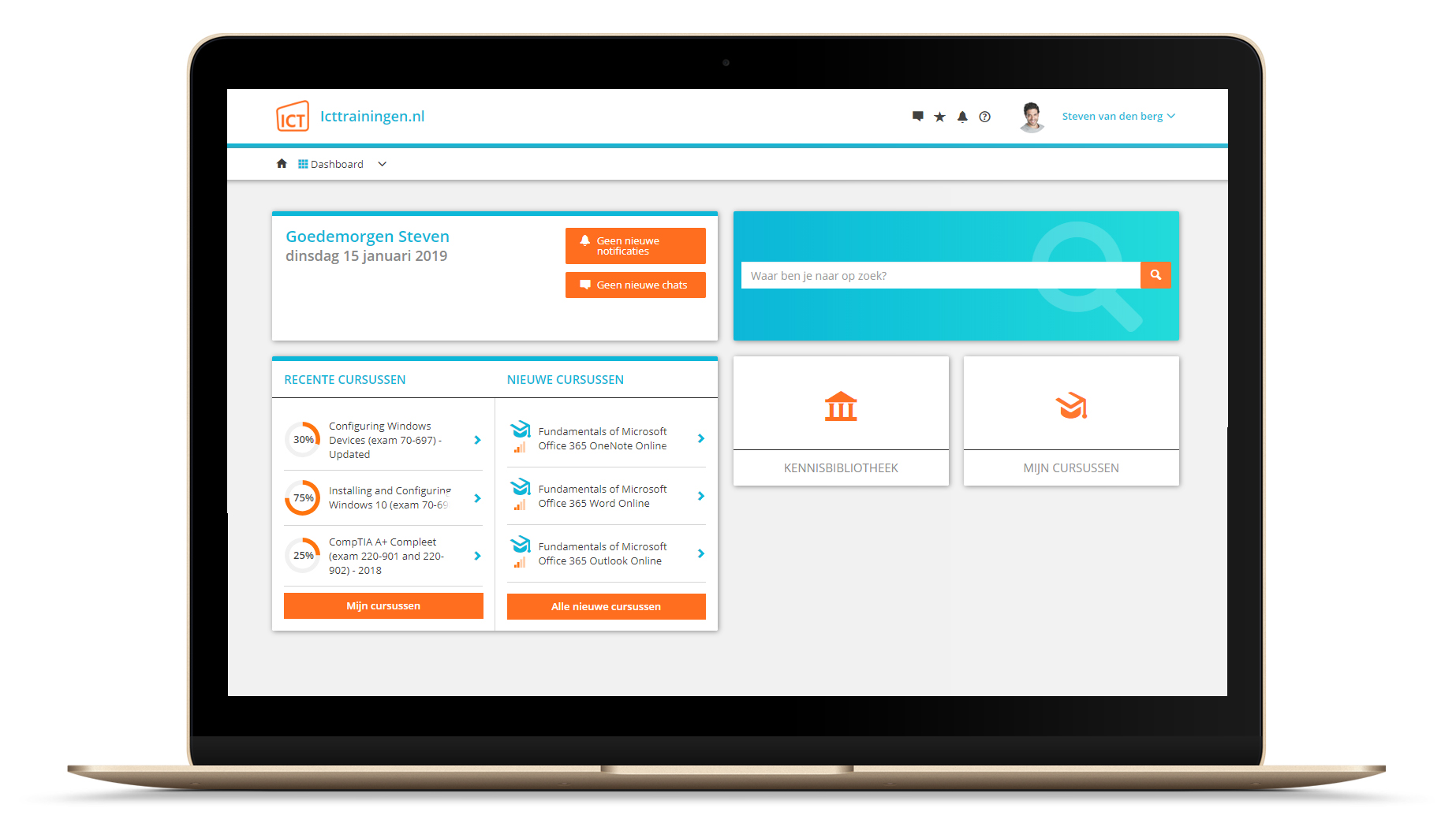

Gain insight into detailed progress information for yourself or your employees.

Gain knowledge through interactive e-learning and extensive practical assignments by certified instructors.

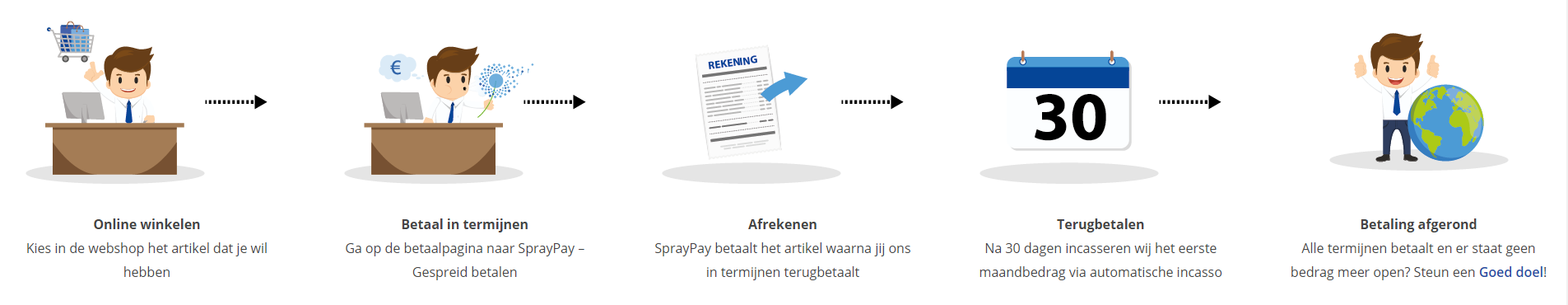

Order process

Once we have processed your order and payment, we will give you access to your courses. If you still have any questions about our ordering process, please refer to the button below.

read more about the order process

Creating a business account

If you are ordering on behalf of your company, we recommend creating a business account with us. You can choose this option during the registration process. You can then enter your company details and add a reference and a separate billing address.

Payment options

We offer various payment options. With every payment option, you will receive an invoice after your order. If your employer is paying, choose payment by invoice.

Creating students

If you have created a business account, you can create students/employees under your account. So if you purchase multiple training courses, you can create students and then assign the courses to your colleagues. Students receive an email with their login details when their account is created and when they are assigned a training course.

Progress information

With a business account, you are automatically the administrator of your organization, and in addition to students you can also create managers. Administrators and managers can also view the progress of all students within your organization.

What is included?

| Feature | Details |
|---|---|
| Certificate of participation | Yes |
| Monitor progress | Yes |
| Award-winning e-learning | Yes |
| Mobile ready | Yes |
| Sharing knowledge | Unlimited access to our IT professionals community |
| Study advice | Our consultants are here to advise you on your study career and options |
| Study materials | Certified teachers with in-depth knowledge of the subject |
| Service | World's best service |

Platform

After ordering your training course, you get access to our innovative learning platform. Here you will find all the courses you have purchased (or followed), you can create students if needed, and you get access to detailed progress information.

FAQ

Didn't find what you were looking for? View all questions or contact us.