Course: Natural Language Processing and Large Language Models

duration: 37 hours |

Language: English (US) |

access duration: 180 days |

Details

Are you fascinated by the power of language and how computers can understand and interact with it? This course is designed to equip you with the skills to unlock the potential of NLP for various applications.

First you'll get a solid foundation, equipping you with the core concepts and techniques used in NLP, mastering text preprocessing, representation, and classification. After that, you'll delves into the cutting-edge world of Large Language Models (LLMs), harnessing the power of deep learning and attention mechanisms.

Result

After following this course, you will be able to:

- Explain the basic concepts and techniques of Natural Language Processing (NLP).

- Use techniques such as text preprocessing, representation, and classification.

- Understand the operation of Large Language Models (LLMs).

- Apply the power of deep learning and attention mechanisms in NLP.

Prerequisites

Knowledge of NLP is recommended.

Content

Natural Language Processing and Large Language Models

Fundamentals of NLP: Introducing Natural Language Processing

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that focuses on programmatically working with text or speech - the term ‘natural' here emphasizes that the program must work with and be aware of everyday language, grammar and semantics, rather than structured text data such as might be found in database or string processing. In this course, you will learn about the two main branches of NLP, natural language understanding and natural language generation. You will also explore the Natural Language Toolkit (NLTK) and spaCy, two popular Python libraries for natural language processing and analysis. Next, you will delve into common preprocessing steps for natural language data. This includes cleaning and tokenizing data, removing stopwords from your text, performing stemming and lemmatization, part-of-speech (POS) tagging, and named entity recognition (NER). Finally, you will get set up with your Python environment and libraries for NLP and explore some text corpora that NLTK offers for working with text.

Fundamentals of NLP: Preprocessing Text Using NLTK & SpaCy

Tokenization, stemming, and lemmatization are essential natural language processing (NLP) tasks. Tokenization involves breaking text into units (tokens), such as words or phrases, facilitating analysis. Stemming reduces words to a common base form by removing prefixes or suffixes, promoting simplicity in representation. In contrast, lemmatization considers grammatical aspects to transform words into their base or dictionary form. You will begin this course by tokenizing text using the Natural Language Toolkit (NLTK) and SpaCy, which involves splitting a large block of text into smaller units called tokens, usually words or sentences. You will then remove stopwords, common words such as "a" and "the" that add little meaning to text. Next, you'll explore the WordNet lexical database, which contains information about the semantic relationship between words. You'll use Synsets to view similar words and explore hypernyms, hyponyms, meronyms and holonyms. Finally, you'll compare stemming and lemmatization using NLTK and SpaCy. You will explore both processes with NLTK and perform lemmatization using SpaCy.

Fundamentals of NLP: Rule-based Models for Sentiment Analysis

Sentiment Analysis is a common use-case within the discipline of Natural Language Processing (NLP). Here, a model attempts to understand the contents of a text document well enough to capture the feelings, or sentiments, conveyed by the text. Sentiment Analysis is widely used by political forecasters, marketing professionals, and hedge fund managers looking to spot trends in voter, user, or market behavior. You will start this course by loading and preprocessing your data. You will read in data on movie reviews from IMDB and explore the dataset. You will then visualize the data using histograms and box plots to understand review length distribution. After that, you will perform basic data cleaning on text, utilizing regular expressions to remove elements like URLs and digits. Finally, you will conduct sentiment analysis using the Valence Aware Dictionary and Sentiment Reasoner (VADER) and TextBlob.

Fundamentals of NLP: Representing Text as Numeric Features

When performing sentiment classification using machine learning, it is necessary to encode text into a numeric format because machine learning models can only parse numbers, not text. There are a number of encoding techniques for text data, such as one-hot encoding, count vector encoding, and word embeddings. In this course, you will learn how to use one-hot encoding, a simple technique that builds a vocabulary from all words in your text corpus. Next, you will move on to count vector encoding, which tracks word frequency in each document and explore term frequency-inverse document frequency (TF-IDF) encoding, which also creates vocabularies and document vectors but uses a TF-IDF score to represent words. Finally, you will perform sentiment analysis using encoded text. You will use a count vector to encode your input data and then set up a Gaussian Naïve-Bayes model. You will train the model and evaluate its metrics. You will also explore how to improve the model performance by stemming words, removing stopwords, and using N-grams.

Fundamentals of NLP: Word Embeddings to Capture Relationships in Text

Before training any text-based machine learning model, it is necessary to encode that text into a machine-readable numeric form. Embeddings are the preferred way to encode text, as they capture data about the meaning of text, and are performant even with large vocabularies. You will start this course by working with Word2Vec embeddings, which represent words and terms in feature vector space, capturing the meaning and context of a word in a sentence. You will generate Word2Vec embeddings on your data corpus, set up a Gaussian Naïve-Bayes classification model, and train it on Word2Vec embeddings. Next, you will move on to GloVe embeddings. You will use the pre-trained GloVe word vector embeddings and explore how to view similar words and identify the odd one out in a set. Finally, you will perform classification using many different models, including Naive-Bayes and Random Forest models.

Natural Language Processing Using Deep Learning

Deep learning has revolutionized natural language processing (NLP), offering powerful techniques for understanding, generating, and processing human language. Through deep neural networks (DNNs), NLP models can now comprehend complex linguistic structures, extract meaningful information from vast amounts of text data, and even generate human-like responses. Begin this course by learning how to utilize Keras and TensorFlow to construct and train neural networks. Next, you will build a DNN to classify messages as spam or not. You will find out how to encode data using count vector and term frequency-inverse document frequency (TF-IDF) encodings via the Keras TextVectorization layer. To enhance the training process, you will employ Keras callbacks to gain insights into metrics tracking, TensorBoard integration, and model checkpointing. Finally, you will apply sentiment analysis using word embeddings, explore the use of pre-trained GloVe word vector embeddings, and incorporate convolutional layers to grasp local text context.

Using Recurrent Networks For Natural Language Processing

Recurrent neural networks (RNNs) are a class of neural networks designed to efficiently process sequential data. Unlike traditional feedforward neural networks, RNNs possess internal memory, which enables them to learn patterns and dependencies in sequential data, making them well-suited for a wide range of applications, including natural language processing. In this course, you will explore the mechanics of RNNs and their capacity for processing sequential data. Next, you will perform sentiment analysis with RNNs, generating and visualizing word embeddings through the TensorBoard embedding projector plug-in. You will construct an RNN, employing these word embeddings for sentiment analysis and evaluating the RNN's efficacy on a set of test data. Then, you will investigate advanced RNN applications, focusing on long short-term memory (LSTM) and bidirectional LSTM models. Finally, you will discover how LSTM models enhance the processing of long text sequences and you will build and train a bidirectional LSTM model to process data in both directions and capture a more comprehensive understanding of the text.

Using Out-of-the-Box Transformer Models for Natural Language Processing

Transfer learning is a powerful machine learning technique that involves taking a pre-trained model on a large dataset and fine-tuning it for a related but different task, significantly reducing the need for extensive datasets and computational resources. Transformers are groundbreaking neural network architectures that use attention mechanisms to efficiently process sequential data, enabling state-of-the-art performance in a wide range of natural language processing tasks. In this course, you will discover transfer learning, the TensorFlow Hub, and attention-based models. Then you will learn how to perform subword tokenization with WordPiece. Next, you will examine transformer models, specifically the FNet model, and you will apply the FNet model for sentiment analysis. Finally, you will explore advanced text processing techniques using the Universal Sentence Encoder (USE) for semantic similarity analysis and the Bidirectional Encoder Representations from Transformers (BERT) model for sentence similarity prediction.

Attention-based Models and Transformers for Natural Language Processing

Attention mechanisms in natural language processing (NLP) allow models to dynamically focus on different parts of the input data, enhancing their ability to understand context and relationships within the text. This significantly improves the performance of tasks such as translation, sentiment analysis, and question-answering by enabling models to process and interpret complex language structures more effectively. Begin this course by setting up language translation models and exploring the foundational concepts of translation models, including the encoder-decoder structure. Then you will investigate the basic translation process by building a transformer model based on recurrent neural networks without attention. Next, you will incorporate an attention layer into the decoder of your language translation model. You will discover how transformers process input sequences in parallel, improving efficiency and training speed through the use of positional and word embeddings. Finally, you will learn about queries, keys, and values within the multi-head attention layer, culminating in training a transformer model for language translation.

Final Exam: Natural Language Processing Fundamentals

Final Exam: Natural Language Processing Fundamentals will test your knowledge and application of the topics presented throughout the Natural Language Processing track.

NLP with LLMs: Working with Tokenizers in Hugging Face

Hugging Face, a leading company in the field of artificial intelligence (AI), offers a comprehensive platform that enables developers and researchers to build, train, and deploy state-of-the-art machine learning (ML) models with a strong emphasis on open collaboration and community-driven development. In this course, you will discover the extensive libraries and tools Hugging Face offers, including the Transformers library, which provides access to a vast array of pre-trained models and datasets. Next, you will set up your working environment in Google Colab. You will also explore the critical components of the text preprocessing pipeline: normalizers and pre-tokenizers. Finally, you will master various tokenization techniques, including byte pair encoding (BPE), Wordpiece, and Unigram tokenization, which are essential for working with transformer models. Through hands-on exercises, you will build and train BPE and WordPiece tokenizers, configuring normalizers and pre-tokenizers to fine-tune these tokenization methods.

NLP with LLMs: Hugging Face Classification, QnA, & Text Generation Pipelines

Sentiment analysis, named entity recognition (NER), question answering, and text generation are pivotal tasks in the realm of Natural Language Processing (NLP) that enable machines to interpret and understand human language in a nuanced manner. In this course, you will be introduced to the concept of Hugging Face pipelines, a streamlined approach to applying pre-trained models to a variety of NLP tasks. Through hands-on exploration, you will learn how to classify text using zero-shot classification techniques, perform sentiment analysis with DistilBERT, and apply models to specialized tasks, utilizing the power of NLP to adapt to niche domains. Next, you will discover how to employ models to accurately answer questions based on provided contexts and understand the mechanics behind model-based answers, including their limitations and capabilities. Finally, you will discover various text generation strategies such as greedy search and beam search, learning how to balance predictability with creativity in generated text. You will also explore text generation through sampling techniques and the application of mask filling with BERT models.

NLP with LLMs: Language Translation, Summarization, & Semantic Similarity

Language translation, text summarization, and semantic textual similarity are advanced problems within the field of Natural Language Processing (NLP) that are increasingly solvable due to advances in the use of large language models (LLMs) and pre-trained models. In this course, you will learn to translate text between languages with state-of-the-art pre-trained models such as T5, M2M 100, and Opus. You will also gain insights into evaluating translation accuracy with BLEU scores and explore multilingual translation techniques. Next, you will explore the process of summarizing text, utilizing the powerful BART and T5 models for abstractive summarization. You will see how these models extract and generate key information from large texts and learn to evaluate the quality of summaries using ROUGE scores. Finally, you will master the computation of semantic textual similarity using sentence transformers and apply clustering techniques to group texts based on their semantic content. You will also learn to compute embeddings and measure similarity directly.

NLP with LLMs: Fine-tuning Models for Classification & Question Answering

Fine-tuning in the context of text-based models refers to the process of taking a pre-trained model and adapting it to a specific task or dataset with additional training. This technique leverages the general language understanding capabilities acquired by the model during its initial extensive training on a large corpus of text and refines its abilities to perform well on a more narrowly defined task or domain-specific data. In this course, you will learn how to fine-tune a model for sentiment analysis, starting with the preparation of datasets optimized for this purpose. You will be guided through setting up your computing environment and preparing a BERT classifier for sentiment analysis. Next, you will discover how to structure text data and align named entity recognition (NER) tags with subword tokenization. You will build on this knowledge to fine-tune a BERT model specifically for NER, training it to accurately identify and classify entities within text. Finally, you will explore the domain of question answering, learning to handle the challenges of long contexts to extract precise answers from extensive texts. You will prepare QnA data for fine-tuning and utilize a DistilBERT model to create an effective QnA system.

NLP with LLMs: Fine-tuning Models for Language Translation, & Summarization

Causal language modeling (CLM), text translation, and summarization demonstrate the versatility and depth of language understanding and generation by artificial intelligence (AI). Fine-tuning models help improve the performance of models for these specific tasks. In this course, you will explore CLM with DistilGPT-2 and masked language modeling (MLM) with DistilRoBERTa, learning how to prepare, process, and fine-tune models for generating and predicting text. Next, you will dive into the nuances of language translation, focusing on translating English to Spanish. You will prepare and evaluate training data and learn to use BLEU scores for assessing translation quality. You will fine-tune a pre-trained T5-small model, enhancing its accuracy and broadening its linguistic capabilities. Finally, you will explore the intricacies of text summarization. Starting with data loading and visualization, you will establish a benchmark using the pre-trained T5-small model. You will then fine-tune this model for summarization tasks, learning to condense extensive texts into succinct summaries.

Final Exam: Architecting LLMs for Your Technical Solutions

Final Exam: Natural Language Processing Fundamentals will test your knowledge and application of the topics presented throughout the Natural Language Processing track.

Course options

We offer several optional training products to enhance your learning experience. If you are planning to use our training course in preperation for an official exam then whe highly recommend using these optional training products to ensure an optimal learning experience. Sometimes there is only a practice exam or/and practice lab available.

Optional practice exam (trial exam)

To supplement this training course you may add a special practice exam. This practice exam comprises a number of trial exams which are very similar to the real exam, both in terms of form and content. This is the ultimate way to test whether you are ready for the exam.

Optional practice lab

To supplement this training course you may add a special practice lab. You perform the tasks on real hardware and/or software applicable to your Lab. The labs are fully hosted in our cloud. The only thing you need to use our practice labs is a web browser. In the LiveLab environment you will find exercises which you can start immediately. The lab enviromentconsist of complete networks containing for example, clients, servers,etc. This is the ultimate way to gain extensive hands-on experience.

Sign In

WHY_ICTTRAININGEN

Via ons opleidingsconcept bespaar je tot 80% op trainingen

Start met leren wanneer je wilt. Je bepaalt zelf het gewenste tempo

Spar met medecursisten en profileer je als autoriteit in je vakgebied.

Ontvang na succesvolle afronding van je cursus het officiële certificaat van deelname van Icttrainingen.nl

Krijg inzicht in uitgebreide voortgangsinformatie van jezelf of je medewerkers

Kennis opdoen met interactieve e-learning en uitgebreide praktijkopdrachten door gecertificeerde docenten

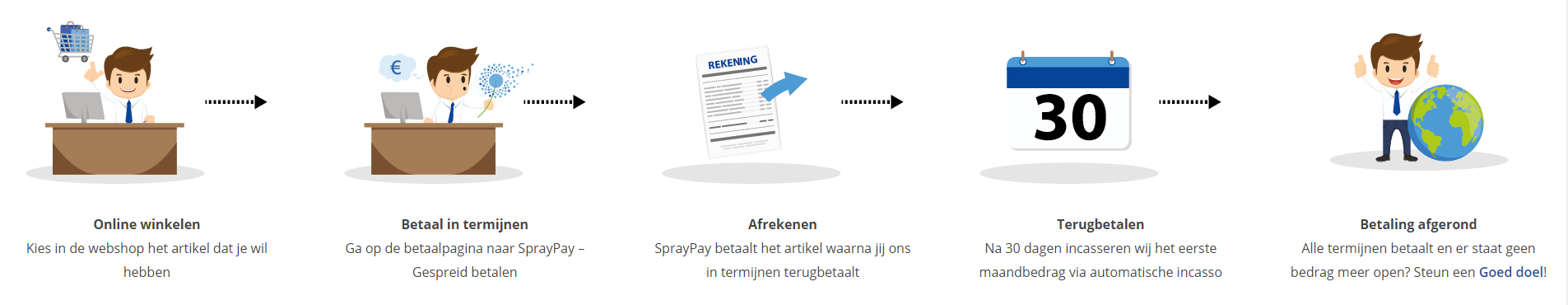

Orderproces

Once we have processed your order and payment, we will give you access to your courses. If you still have any questions about our ordering process, please refer to the button below.

read more about the order process

Een zakelijk account aanmaken

Wanneer u besteld namens uw bedrijf doet u er goed aan om aan zakelijk account bij ons aan te maken. Tijdens het registratieproces kunt u hiervoor kiezen. U heeft vervolgens de mogelijkheden om de bedrijfsgegevens in te voeren, een referentie en een afwijkend factuuradres toe te voegen.

Betaalmogelijkheden

U heeft bij ons diverse betaalmogelijkheden. Bij alle betaalopties ontvangt u sowieso een factuur na de bestelling. Gaat uw werkgever betalen, dan kiest u voor betaling per factuur.

Cursisten aanmaken

Als u een zakelijk account heeft aangemaakt dan heeft u de optie om cursisten/medewerkers aan te maken onder uw account. Als u dus meerdere trainingen koopt, kunt u cursisten aanmaken en deze vervolgens uitdelen aan uw collega’s. De cursisten krijgen een e-mail met inloggegevens wanneer zij worden aangemaakt en wanneer zij een training hebben gekregen.

Voortgangsinformatie

Met een zakelijk account bent u automatisch beheerder van uw organisatie en kunt u naast cursisten ook managers aanmaken. Beheerders en managers kunnen tevens voortgang inzien van alle cursisten binnen uw organisatie.

What is included?

| Certificate of participation | Yes |

| Monitor Progress | Yes |

| Award Winning E-learning | Yes |

| Mobile ready | Yes |

| Sharing knowledge | Unlimited access to our IT professionals community |

| Study advice | Our consultants are here for you to advice about your study career and options |

| Study materials | Certified teachers with in depth knowledge about the subject. |

| Service | World's best service |

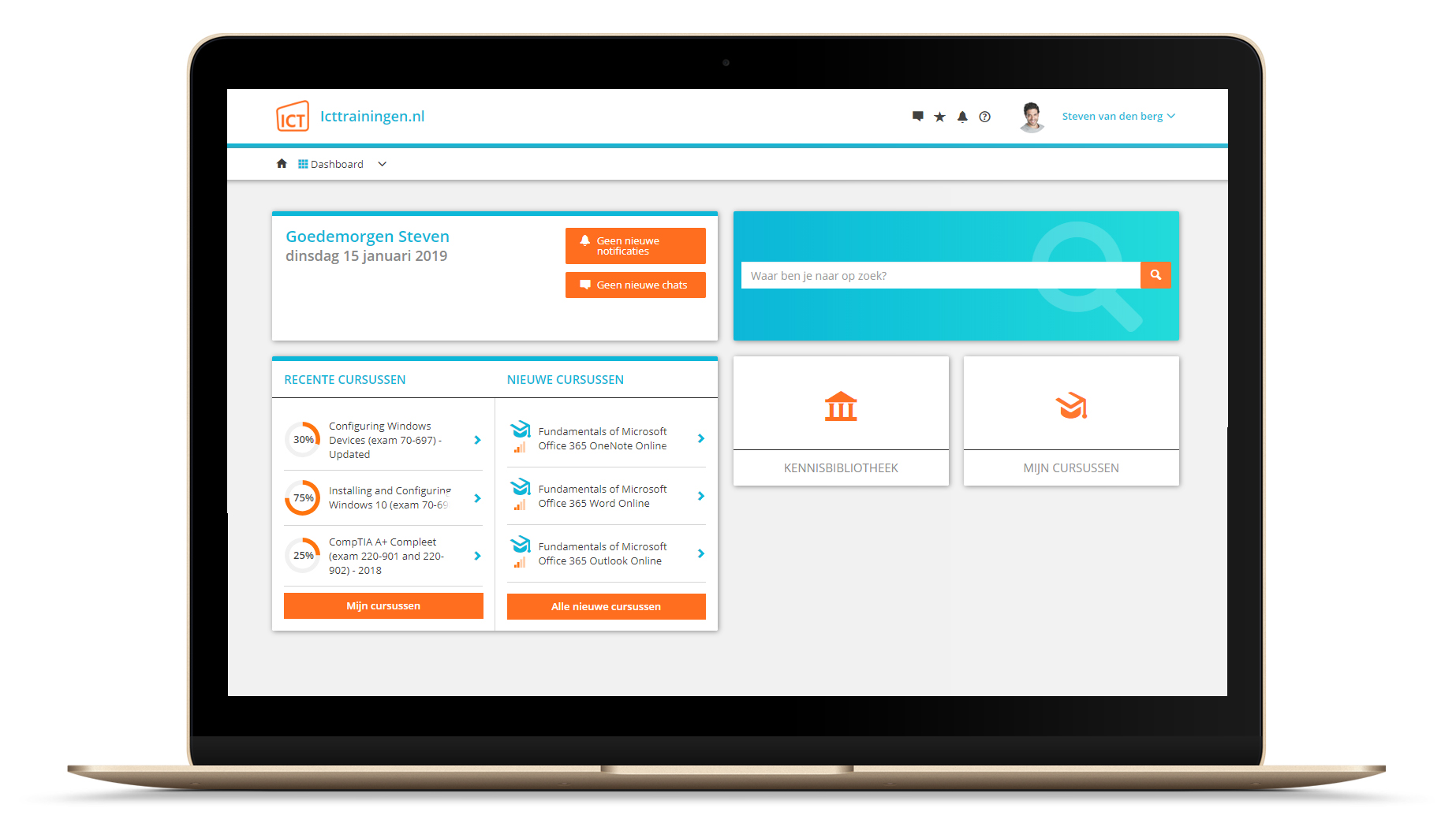

Platform

Na bestelling van je training krijg je toegang tot ons innovatieve leerplatform. Hier vind je al je gekochte (of gevolgde) trainingen, kan je eventueel cursisten aanmaken en krijg je toegang tot uitgebreide voortgangsinformatie.

FAQ

Niet gevonden wat je zocht? Bekijk alle vragen of neem contact op.