Course: Processing Data with Apache Kafka

Duration: 11 hours

Language: English (US)

Access duration: 180 days

Details

This course introduces you to Apache Kafka. Apache Kafka is an event streaming platform used by Fortune 100 companies. You will explore the characteristics of event streaming and how the Kafka architecture enables scalable streaming of data. This course also focuses on integrating Python applications with a Kafka environment, implementing consumer groups, and customizing Kafka configurations.

Apache Spark is a distributed data processing engine that can process petabytes of data by splitting that data into chunks and distributing them across a cluster of resources. This course covers Spark's structured streaming engine.

It also covers the following:

- Installing Kafka and creating topics.

- Creating brokers and a cluster of nodes to handle messages and store their replicas.

- Connecting to Kafka from within Python.

- Various ways to optimize Kafka's performance, using configurations for brokers and topics, and for producer and consumer apps.

- Spark's structured streaming engine, including components such as the PySpark shell.

- Building Spark applications that process data streamed to Kafka topics using DataFrames.

- Apache Cassandra and the steps required to interface Spark with this wide-column database.

Result

After completing this course, you will be familiar with Apache Kafka, Apache Spark and Apache Cassandra. You will recognize the characteristics of event streaming and know how to integrate Python applications with a Kafka environment.

Prerequisites

You should have at least a basic knowledge of data processing.

Target audience

Software developers, data analysts

Content

Processing Data with Apache Kafka

Processing Data: Getting Started with Apache Kafka

Apache Kafka is a popular event streaming platform used by Fortune 100 companies for both real-time and batch data processing. In this course, you will explore the characteristics of event streaming and how the Kafka architecture allows for scalable streaming of data. Install Kafka and create some topics, which are essentially channels of communication between apps and data. Set up and work with multiple topics for durable storage. Create multiple brokers and a cluster of nodes to handle messages and store their replicas. Then, monitor the settings and logs for those brokers. Finally, see how topic partitions and replicas provide redundancy and maintain high availability.
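To illustrate how replicas provide redundancy, here is a minimal Python sketch. It assumes a simple round-robin placement of replicas on hypothetical brokers 0, 1 and 2, and a naive "first surviving replica becomes leader" rule. Kafka's real assignment is rack-aware and its controller elects new leaders from the in-sync replicas, so treat this only as a model of the idea, not of Kafka's implementation.

```python
"""Simplified model of partition replicas and failover.

Assumptions (not Kafka's actual algorithms): replicas are placed
round-robin on distinct brokers, and after a broker failure the first
surviving replica in each list becomes the new leader."""


def assign_replicas(num_partitions, broker_ids, replication_factor):
    """Return {partition: [broker, ...]}; the first broker is the leader."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    n = len(broker_ids)
    # Partition p starts on broker p % n; followers go on the next brokers in the ring.
    return {
        p: [broker_ids[(p + r) % n] for r in range(replication_factor)]
        for p in range(num_partitions)
    }


def leaders_after_failure(assignment, failed_broker):
    """Map each partition to its new leader (first replica not on the failed
    broker). Partitions with no surviving replica are omitted: they are offline."""
    leaders = {}
    for p, replicas in assignment.items():
        live = [b for b in replicas if b != failed_broker]
        if live:
            leaders[p] = live[0]
    return leaders


if __name__ == "__main__":
    layout = assign_replicas(num_partitions=3, broker_ids=[0, 1, 2], replication_factor=2)
    print(layout)  # {0: [0, 1], 1: [1, 2], 2: [2, 0]}
    for broker in [0, 1, 2]:
        print("broker", broker, "down ->", leaders_after_failure(layout, broker))
```

With a replication factor of 2, every partition keeps a leader whichever single broker fails; with a replication factor of 1, the partitions stored on the failed broker go offline.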

Processing Data: Integrating Kafka with Python & Using Consumer Groups

Producers and consumers are applications that write events to and read events from Kafka. In this course, you will focus on integrating Python applications with a Kafka environment, implementing consumer groups, and tweaking Kafka configurations. Begin by connecting to Kafka from Python. You will produce messages to and consume messages from a Kafka topic using Python. Next, discover how to tweak Kafka broker configurations: you will place limits on the size of messages and disable the deletion of topics. Then, publish messages to partitioned topics and explore the use of partitioning algorithms to determine the placement of messages on partitions. Explore consumer groups, which allow a set of consumers to process messages published to partitioned Kafka topics in parallel, without any duplication of effort. Finally, learn different ways to optimize Kafka's performance, using configurations for brokers and topics, as well as for producer and consumer apps.
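The two ideas of key-based partitioning and consumer groups can be modeled in a few lines of plain Python. This is a sketch under stated assumptions: `zlib.crc32` stands in for the client's partitioner hash (Kafka's Java client uses murmur2 by default, and other clients may differ), the assignment mimics a range-style strategy, and the consumer names and keys are hypothetical. No Kafka connection is involved.

```python
"""Model of key-based partitioning plus a range-style consumer group assignment.

Assumptions: zlib.crc32 is a stand-in for the real partitioner hash, and
consumer names / message keys are hypothetical examples."""
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Same key -> same partition, so messages for one key stay in order."""
    return zlib.crc32(key) % num_partitions


def range_assign(num_partitions, consumers):
    """Give each consumer in the group a contiguous block of partitions.
    Each partition goes to exactly one consumer, so no work is duplicated."""
    if not consumers:
        raise ValueError("a consumer group needs at least one member")
    members = sorted(consumers)
    per_member, extra = divmod(num_partitions, len(members))
    assignment, start = {}, 0
    for i, member in enumerate(members):
        # The first `extra` members each take one additional partition.
        count = per_member + (1 if i < extra else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


if __name__ == "__main__":
    partitions = 3
    group = range_assign(partitions, ["consumer-a", "consumer-b"])
    owner = {p: c for c, ps in group.items() for p in ps}
    for key in [b"user-1", b"user-2", b"user-1"]:
        p = choose_partition(key, partitions)
        print(key.decode(), "-> partition", p, "-> handled by", owner[p])
```

Note that if a group has more consumers than the topic has partitions, the extra consumers receive no partitions and sit idle, which is why the partition count caps a group's parallelism.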

Processing Data: Introducing Apache Spark

Apache Spark is a powerful distributed data processing engine that can handle petabytes of data by chunking that data and dividing it across a cluster of resources. In this course, explore Spark's structured streaming engine, including components like the PySpark shell. Begin by downloading and installing Apache Spark. Then, create a Spark cluster and run a job from the PySpark shell. Monitor an application and its job runs from the Spark web user interface. Next, set up a streaming environment, reading and manipulating the contents of files that are added to a folder in real time. Finally, run apps in both Spark standalone and local modes.

Processing Data: Integrating Kafka with Apache Spark

Flexible and intuitive, DataFrames are a popular data structure in data analytics. In this course, build Spark applications that process data streamed to Kafka topics using DataFrames. Begin by setting up a simple Spark app that streams in messages from a Kafka topic, processes and transforms them, and publishes them to an output sink. Next, leverage the Spark DataFrame application programming interface by performing selections, projections, and aggregations on data streamed in from Kafka, while also exploring the use of SQL queries for those transformations. Finally, you will perform windowing operations: both tumbling windows, where the windows do not overlap, and sliding windows, where there is some overlap of data.
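The difference between tumbling and sliding windows can be seen by assigning event timestamps to windows by hand. The following plain-Python sketch is not Spark code; in the course's Spark apps this grouping is done by `pyspark.sql.functions.window(timeColumn, windowDuration, slideDuration)`. It assumes integer timestamps (for example, seconds) and window starts aligned to multiples of the slide, beginning at 0; the event times are made-up sample values.

```python
"""Assign integer event timestamps to tumbling and sliding windows, then count
events per window. Windows are half-open intervals [start, end).

Assumption: window starts are aligned to multiples of the slide (or size),
similar in spirit to Spark's window() grouping."""
from collections import Counter


def tumbling_windows(ts, size):
    """Exactly one non-overlapping window contains each timestamp."""
    start = (ts // size) * size
    return [(start, start + size)]


def sliding_windows(ts, size, slide):
    """Every window of length `size`, starting at a multiple of `slide`, that contains ts."""
    if not 0 < slide <= size:
        raise ValueError("this sketch requires 0 < slide <= size")
    windows = []
    start = (ts // slide) * slide  # latest window start at or before ts
    while start > ts - size:       # the window [start, start + size) still covers ts
        windows.append((start, start + size))
        start -= slide
    return sorted(windows)


def count_per_window(timestamps, assign):
    """Count events per window, where `assign` maps a timestamp to its windows."""
    counts = Counter()
    for ts in timestamps:
        for window in assign(ts):
            counts[window] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    events = [1, 4, 7, 12, 13]
    print(count_per_window(events, lambda t: tumbling_windows(t, 10)))
    print(count_per_window(events, lambda t: sliding_windows(t, 10, 5)))
```

Each event is counted once with tumbling windows, but with a 10-unit window sliding every 5 units each event falls into two overlapping windows (in general, size / slide windows when the size is a multiple of the slide). A tumbling window is simply a sliding window whose slide equals its size.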

Processing Data: Using Kafka with Cassandra & Confluent

Apache Cassandra is a trusted open-source NoSQL distributed database that easily integrates with Apache Kafka as part of an ETL pipeline. This course focuses on that integration of Kafka, Spark, and Cassandra and explores a managed version of Kafka with the Confluent data streaming platform. Begin by integrating Kafka with Apache Cassandra as part of an ETL pipeline involving a Spark application. Discover Apache Cassandra and learn the steps involved in linking Spark with this wide-column database. Next, examine the various features of the Confluent platform and find out how easy it is to set up and work with a Kafka environment. After completing this course, you will be prepared to implement and manage stream processing systems in your organization.

Course options

We offer several optional training products to enhance your learning experience. If you are planning to use our training course in preparation for an official exam, we highly recommend using these optional training products to ensure an optimal learning experience. Sometimes only a practice exam and/or a practice lab is available.

Optional practice exam (trial exam)

To supplement this training course you may add a special practice exam. This practice exam comprises a number of trial exams which are very similar to the real exam, both in terms of form and content. This is the ultimate way to test whether you are ready for the exam.

Optional practice lab

To supplement this training course, you may add a special practice lab. You perform the tasks on real hardware and/or software applicable to your lab. The labs are fully hosted in our cloud; all you need to use our practice labs is a web browser. In the LiveLab environment, you will find exercises that you can start immediately. The lab environment consists of complete networks containing, for example, clients and servers. This is the ultimate way to gain extensive hands-on experience.

Sign In

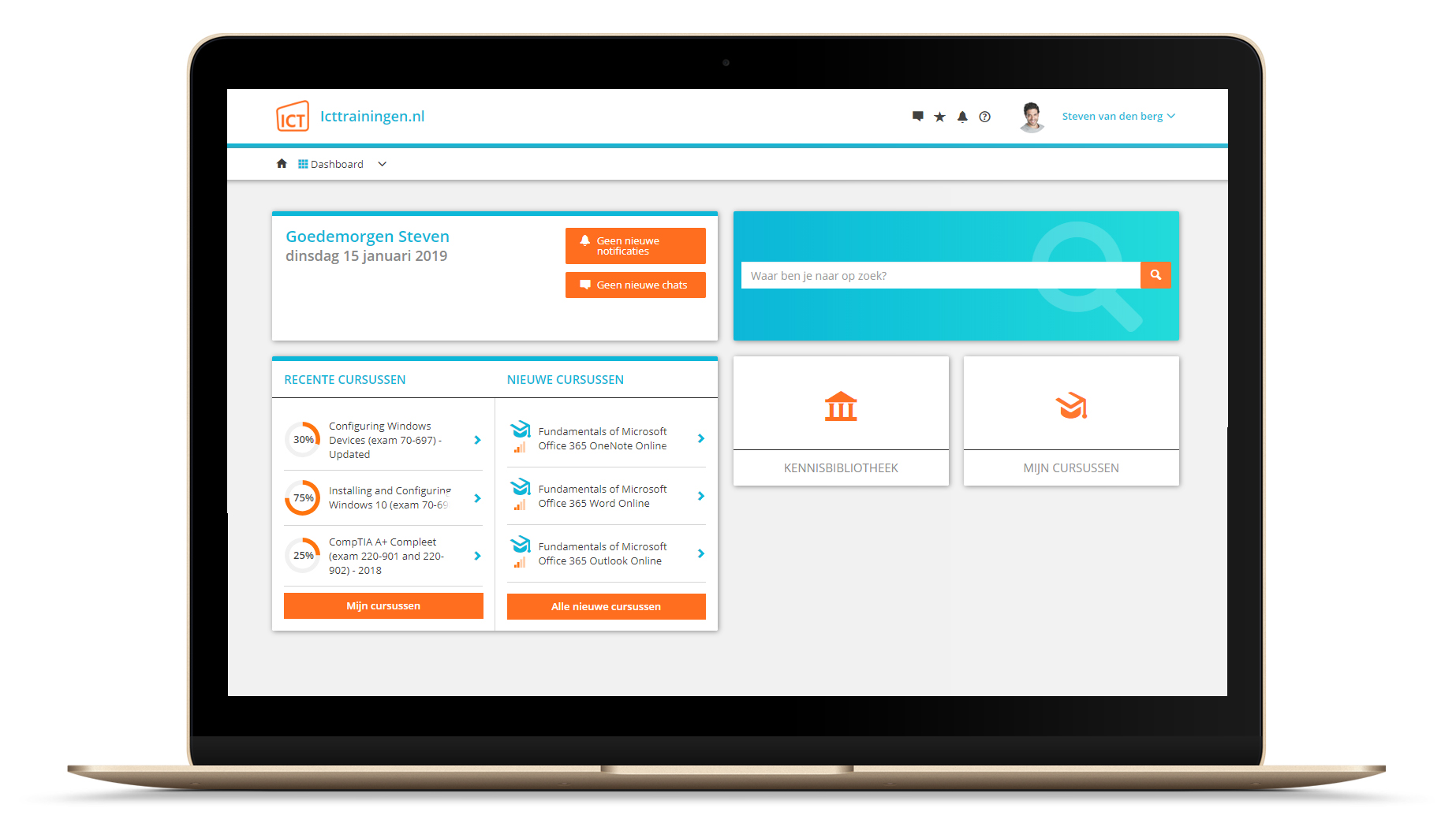

Why Icttrainingen

With our training concept, you save up to 80% on training courses.

Start learning whenever you want. You set your own pace.

Exchange ideas with fellow students and establish yourself as an authority in your field.

After successfully completing your course, receive the official certificate of participation from Icttrainingen.nl.

Gain insight into detailed progress information for yourself or your employees.

Gain knowledge through interactive e-learning and extensive practical assignments by certified instructors.

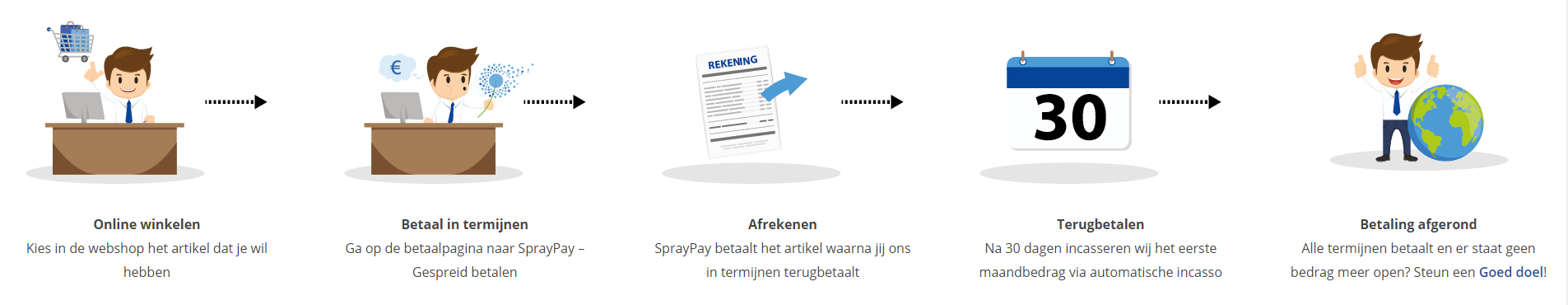

Order process

Once we have processed your order and payment, we will give you access to your courses.

Creating a business account

If you are ordering on behalf of your company, we recommend creating a business account with us. You can choose this option during the registration process. You can then enter your company details and add a reference and a separate billing address.

Payment options

We offer various payment options. With every payment option, you will always receive an invoice after your order. If your employer is paying, choose payment by invoice.

Creating students

If you have created a business account, you have the option to create students/employees under your account. So if you purchase multiple training courses, you can create students and then assign the courses to your colleagues. Students receive an email with login details when they are created and when they have been assigned a training course.

Progress information

With a business account, you are automatically the administrator of your organization and can create managers as well as students. Administrators and managers can also view the progress of all students within your organization.

What is included?

| Feature | Included |
|---|---|
| Certificate of participation | Yes |
| Monitor progress | Yes |
| Award-winning e-learning | Yes |
| Mobile ready | Yes |
| Sharing knowledge | Unlimited access to our IT professionals community |
| Study advice | Our consultants are here to advise you about your study career and options |
| Study materials | Certified teachers with in-depth knowledge of the subject |
| Service | World's best service |

Platform

After ordering your training, you get access to our innovative learning platform. Here you will find all your purchased (or completed) training courses, you can create students if needed, and you get access to detailed progress information.

FAQ

Didn't find what you were looking for? View all questions or contact us.